The Virtual Unattended Store

Reinventing Retail: What’s in store for the future of “virtual” shopping?

The Virtual Unattended Store is a gamified, multimodal interactive online shopping experience

which brings the participant through a futuristic virtual online store that takes the explorative brick-and-mortar offline shopping experience to the next level.

Participants can interact with the virtual store and its products using their phone and gestures,

while learning about Mastercard’s AI, 5G, and Cloud Payment technologies along the way.

The user's cellphone serves as a touch interface and controller that helps them navigate the store and trigger scene changes or interactive content on their desktop.

For example, tapping one's actual cellphone on the virtual card reader in the game scene will “open” the virtual store gate,

and moving one's phone towards an item in the desktop game scene will “scan and add that item to cart”.
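The phone-as-controller idea above can be sketched as a small event handler. This is only an illustrative sketch, not the project's actual Unity implementation: the event names (`tap_card_reader`, `point_at_item`), the `StoreScene` class, and the dictionary-shaped events are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class StoreScene:
    """Hypothetical desktop scene state driven by phone events."""
    gate_open: bool = False
    cart: list = field(default_factory=list)


def handle_phone_event(scene, event):
    """Apply one phone event (a dict with a 'type' key) to the scene."""
    kind = event.get("type")
    if kind == "tap_card_reader":
        # Tapping the phone on the virtual card reader opens the gate.
        scene.gate_open = True
    elif kind == "point_at_item":
        # Moving the phone towards an item scans it into the cart once.
        item = event.get("item")
        if item is not None and item not in scene.cart:
            scene.cart.append(item)
    return scene
```

Unknown event types are ignored, so the desktop scene stays unchanged if the phone sends something the scene does not understand.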

Customers engage with the virtual store at a deeper level through hybrid and embodied interactions, leading to multiple wow moments in the journey.

I led the conceptualization, design, engineering, and go-to-market of this entire project.

It launched in mid-2021, won leadership support, and has been implemented in Mastercard Experience Centers globally.

Work at Mastercard Labs | 2021

My Role: Concept Design, End-to-End Experience Design, VR Prototyping, Engineering, Project Lead

Tools: Unity, Figma

The Vision

“How do we preserve, or even elevate, the spontaneous joy of brick-and-mortar shopping

in next-gen online, digital shopping experiences?”

Background

Our project aims to understand the temporal quality of a film and transfer that into other media.

For each film, its temporality is examined at different levels of resolution: from the entire film, to a selected group of shots, to a single shot.

Then we try to reproduce the same temporality in a new context. In this case, architectural visualization was chosen as our application.

Ideation

We chose an architectural documentary as the initial input for the system to “learn” from,

since we hope our algorithm can eventually be applied to architectural visualization.

Also, compared to other genres (e.g. action or horror movies), the camera language of architectural documentaries is easier to digitize.

User Flow

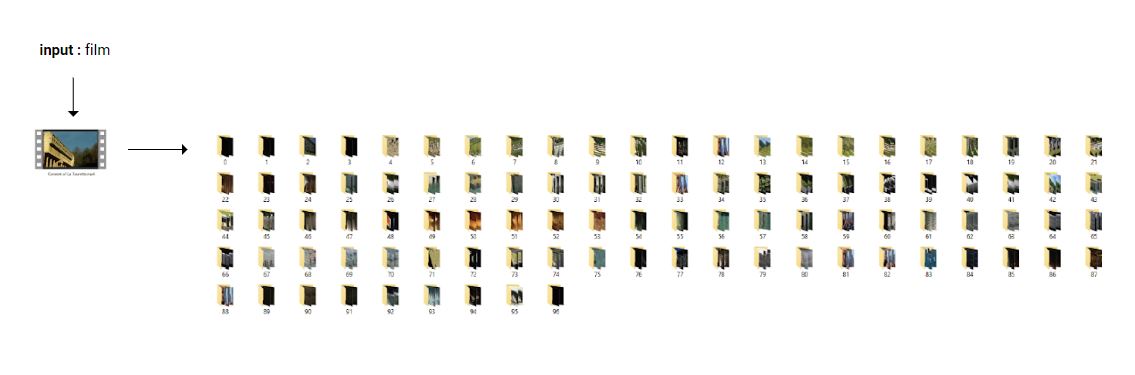

To understand the overall film structure, the first step is to identify the shot cuts in a film. We were able to cut the film into

97 separate shot groups, auto-saving the screenshots from each continuous shot into its own folder.
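The shot-cut step can be illustrated with a simple frame-difference detector. This is a minimal sketch of one common approach, not necessarily the method used in the project: it assumes each frame is a flat list of grayscale pixel values, and both the `threshold` value and the function names are assumptions made for illustration.

```python
def mean_abs_diff(frame_a, frame_b):
    """Average absolute pixel difference between two equally sized frames."""
    return sum(abs(a - b) for a, b in zip(frame_a, frame_b)) / len(frame_a)


def split_into_shots(frames, threshold=40.0):
    """Group frame indices into shots; a large jump between frames is a cut.

    `threshold` is an assumed value and would need tuning per film.
    """
    if not frames:
        return []
    shots = [[0]]
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i - 1], frames[i]) > threshold:
            shots.append([i])    # start a new shot at the cut
        else:
            shots[-1].append(i)  # same continuous shot
    return shots
```

Each inner list holds the frame indices of one continuous shot, which maps naturally onto saving the screenshots of each shot into its own folder.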

Then we classified the 97 shots into 4 categories according to the signature screenshot:

shots of the architecture itself, shots of the architect, shots of the architectural model, and other shots.

Among those, we are only interested in shots of the architecture itself.

Putting the above shot classification result along the timeline of the film, we got a summary of the overall film structure.
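As a rough sketch of how the classification result can be turned into a summary of the film structure, the snippet below computes each category's share of screen time. The tuple layout `(label, start_sec, end_sec)` and the short label names are assumptions; the four categories themselves come from the text above.

```python
from collections import defaultdict

# The four categories from the text, with assumed short label names.
CATEGORIES = ("architecture", "architect", "model", "other")


def summarize_structure(shots):
    """Return each category's share of total screen time.

    `shots` is a list of (label, start_sec, end_sec) tuples in film order.
    """
    totals = defaultdict(float)
    for label, start, end in shots:
        totals[label] += end - start
    film_length = sum(totals.values())
    return {
        label: totals[label] / film_length
        for label in CATEGORIES
        if totals[label] > 0
    }


def architecture_only(shots):
    """Keep only the shots of the architecture itself."""
    return [shot for shot in shots if shot[0] == "architecture"]
```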

Mobile UX Design

As stated above, we’re only interested in shots of the architecture itself. Other shot groups were discarded.

A static diagram was produced for each shot to study its basic visual properties (light, color, etc.) and their variation through time.

It can be seen that some shots are totally still, some have camera movement, while others have both camera and human motion.

Since it is hard for our software to analyze human motion, we decided to narrow our focus to the static shots as well as those

that only have camera movement in them.

That led us to choose shots 16-19, because this shot group had an appealing mix of the two types of shots we wanted.
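The selection logic described above (drop shots with human motion, then look for a consecutive group that mixes still and camera-only shots) could be sketched as follows. The labels `still`, `camera`, and `human` and the function name are hypothetical, and the project's shots may well have been picked by eye rather than by code.

```python
def mixed_runs(shot_types):
    """Find consecutive runs of usable shots that contain both kinds.

    `shot_types` maps shot number -> 'still', 'camera', or 'human'.
    A usable shot is 'still' or 'camera'; 'human' shots break a run.
    """
    usable = ("still", "camera")
    runs, current = [], []
    for number in sorted(shot_types):
        kind = shot_types[number]
        contiguous = current and number == current[-1] + 1
        if kind in usable and (not current or contiguous):
            current.append(number)
        else:
            if current:
                runs.append(current)
            current = [number] if kind in usable else []
    if current:
        runs.append(current)
    # Keep only runs that mix still shots with camera-movement shots.
    return [r for r in runs if {shot_types[n] for n in r} == set(usable)]
```

For example, with made-up labels `{1: "human", 2: "still", 3: "camera", 4: "camera", 5: "still", 6: "human", 7: "still"}`, the only run returned is `[2, 3, 4, 5]`.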

Unity Prototyping

So we went on to analyze the temporality of shot 16-19, aiming to convert it into a digital format which can be reused in other contexts.

There are many dimensions of temporality in a film

(e.g. how the lightness or color tone shifts, how the sound changes, or how the camera moves over a period of time).

Considering the tools we had as well as the future application we wanted, camera movement became our target subject for temporality.

Structure from Motion (SfM) was used to recover the camera information in each shot. From a continuous group of frames,

the software was able to interpret the camera position, angle, and focal length in each viewport, then output the 3D camera point clouds.
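To make the recovered camera data reusable in another context, one option is to store it as a track relative to the first frame and normalized by total path length. The sketch below assumes the SfM tool has already exported one position and focal length per frame; the `CameraSample` type and function names are hypothetical, and camera angle is left out for brevity.

```python
import math
from dataclasses import dataclass


@dataclass
class CameraSample:
    """One recovered camera per frame (assumed export format)."""
    position: tuple          # (x, y, z) in the SfM tool's own units
    focal_length_mm: float


def path_length(samples):
    """Total distance travelled by the camera across the shot."""
    return sum(
        math.dist(a.position, b.position)
        for a, b in zip(samples, samples[1:])
    )


def to_relative_track(samples):
    """Re-express positions relative to the first frame, scaled so the
    whole path has length 1, so it can be replayed in a scene of any size."""
    origin = samples[0].position
    total = path_length(samples)
    scale = 1.0 / total if total > 0 else 1.0  # a still shot stays at the origin
    return [
        tuple((c - o) * scale for c, o in zip(s.position, origin))
        for s in samples
    ]
```

A still shot yields a track of identical points at the origin, while a moving shot yields a unit-length path that can be rescaled to fit a new architectural model.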

It’s Live Now!

Yes, this project did launch (in mid-2021)! Try it out yourself at https://virtual.alpha.mastercardlabs.com

Enjoy!